augmenting memory with LLMs

Here's a thrill: discovering how AI can fuse with time-tested learning techniques to push the boundaries of what we can remember.

I can get a little fixated on things—especially ones that excite me, particularly meta-skills that I think can advance me well beyond where I am now. Maybe that makes me a bit of a self-improvement junkie, but really my excitement is rooted in how new skills can enable me to get the most out of this short life and give something back to others.

LLMs have completely changed the game—they are so flexible and powerful, and their applications will be wide-ranging in how they can help us. The most common use cases I've seen have been in code completion, summarization, and content generation. That's all good, but I'm much more interested in how these tools can augment us as humans—how they can merge with traditional, ancient methodologies like practice, discipline, and learning, where we integrate knowledge into who we are.

I've taken a keen interest in meta-skills for learning, starting with memorization. I finished reading Higbee's book on memory, which outlines several different techniques for how to commit various kinds of information into memory. The book was written 30+ years ago, well before LLMs. I am so curious to see how I can apply his methodologies with LLMs.

Let's take a simple example. I want to commit different facts to memory, such as the altitude of a given mountain. I initially tried brute-force Anki memorization—question on one side of the card asking the altitude of a mountain, and the exact number on the other. This didn't work very well—I would quickly forget, and my retention wouldn't last beyond a month or two. The relevance and importance played a role here too—yes, I want to remember these, but these facts don't have massive value in themselves, so they're not worth spending a ton of time on. I want a low-effort, high-leverage means of committing these to memory. If my effort to memorize something goes way down due to a systematic approach that works, then it makes sense to commit a bunch of these facts to memory.

I couldn't remember exact numbers, so I would round them: Cusco is approximately 3400m in altitude, Machu Picchu 2400m, and so on. This made retention easier, but it was still prone to error.

So here's where I got excited about the phonetic system of memorization. The phonetic system is a memory technique where each digit is converted into a consonant sound based on fixed rules. Those sounds are then turned into words by adding vowels. For example, the number 33 becomes "MM" (the consonant sound for 3 is "M") and is then turned into a word like "Miami." Similarly, 99 becomes "PP" (9 maps to "P"), which can be turned into "puppy." The idea is to create vivid, memorable images that help you recall the numbers. Now I could associate images with otherwise arbitrary numbers and with what those numbers relate to.
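To make the digit-to-sound step concrete, here is a minimal sketch in Python. It assumes the commonly published version of the phonetic (major) system; only 3 → M and 9 → P come from the examples above, and the rest of the table, along with the names `DIGIT_SOUNDS` and `consonant_skeleton`, are my own illustration rather than anything taken from Higbee's book.

```python
# Commonly published phonetic (major) system table: digit -> consonant sounds.
# Assumption: only 3 -> M and 9 -> P are stated in this post; the other rows
# follow the standard mapping and may differ slightly between sources.
DIGIT_SOUNDS = {
    "0": ["S", "Z"],
    "1": ["T", "D"],
    "2": ["N"],
    "3": ["M"],
    "4": ["R"],
    "5": ["L"],
    "6": ["J", "SH", "CH"],
    "7": ["K", "G"],
    "8": ["F", "V"],
    "9": ["P", "B"],
}


def consonant_skeleton(number: str) -> str:
    """Return the first-choice consonant sound for each digit, ignoring non-digits."""
    return "".join(DIGIT_SOUNDS[d][0] for d in number if d.isdigit())


print(consonant_skeleton("33"))  # MM
print(consonant_skeleton("99"))  # PP
```

Turning the skeleton into an actual word (MM into "Miami") still means adding vowels by hand or looking the number up in a prepared word list.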

Conjuring our own images is great, but why not have LLMs do that work for us? We can use an appendix of words that align with values 00–100, generate one-line stories with those words, and then have the LLM create an image for us. We then add that to the Anki card for aided memorization.
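As a rough sketch of the "words in, image prompt out" step, the function below just assembles a text prompt that could be pasted into whatever image generator you use. The function name, the wording of the template, and the `style` parameter are all hypothetical; it does not call any LLM API.

```python
def build_image_prompt(subject: str, words: list[str], style: str = "") -> str:
    """Compose a one-line image prompt from the mnemonic words and the subject.

    The template wording is an assumption; adjust it to taste.
    """
    ordered = ", ".join(words)
    prompt = (
        f"Create a vivid, memorable image set in {subject} "
        f"that clearly features, in order: {ordered}"
    )
    if style:
        prompt += f", in a {style} style"
    return prompt + "."


# Example: mnemonic words for a four-digit altitude
print(build_image_prompt("Cusco", ["Miami", "puppy"]))
```

Keeping the words in order inside the prompt matters, since the order of the images is what encodes the order of the digits.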

So let's start with Cusco. Its exact altitude is 3399m. I used Miami (33) and puppy (99) as my mnemonic words. Great... so I had ChatGPT generate an image of a puppy in Cusco in a Miami Vice style:

It's pretty remarkable how easily the mind remembers images—so now when I want to recall the exact altitude, I recall this image, the sequence "Miami puppy," and then the numbers 3399. As I get more practice iterations, I see that I no longer need to calculate the mapping of Miami to 33 or puppy to 99—the number sequence emerges naturally as 3399 when I think of the image.

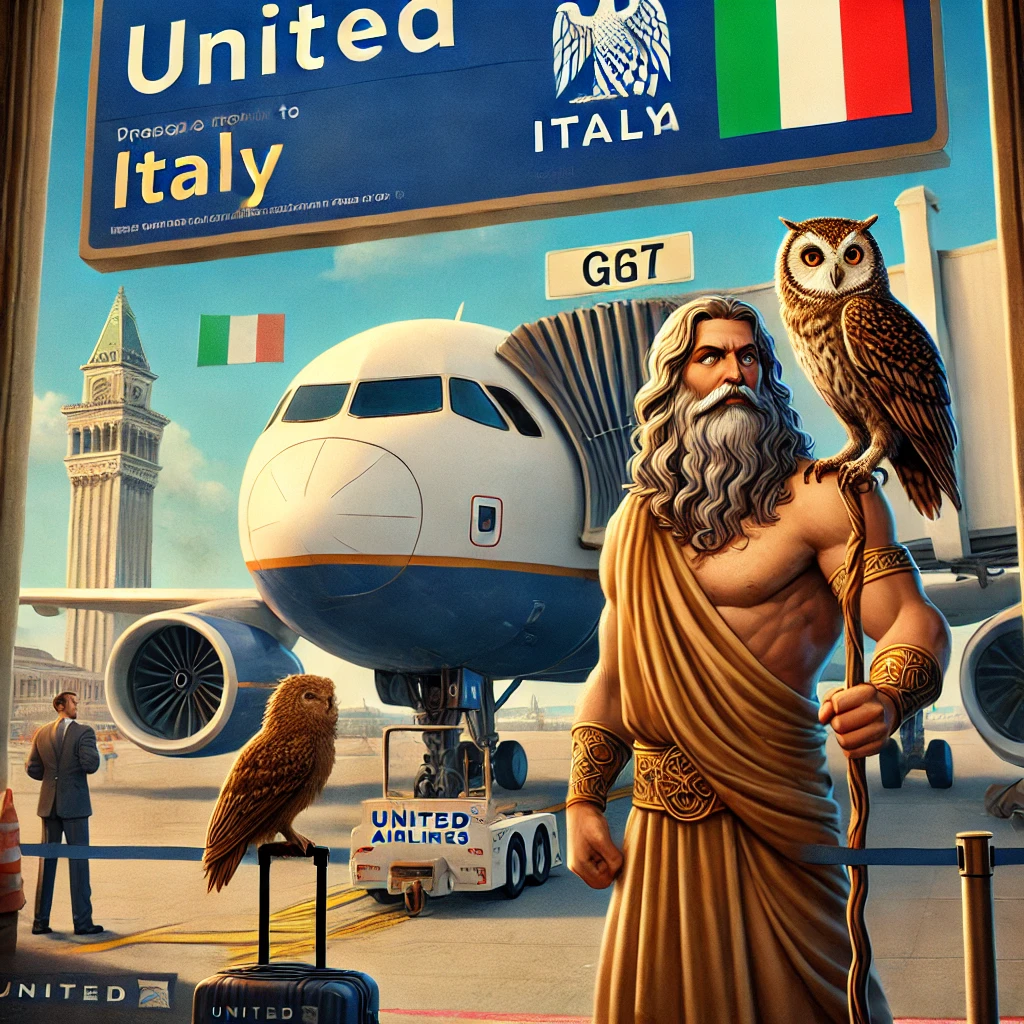

So you can see I'm a little excited about how I can apply this to remember anything I no longer want to forget. Some of it's silly, like remembering that the conversion rate from dollars to United Airlines points is 0.0145. So I look at my index, find the words Zeus (00), Italy (14), and Owl (5), and construct a one-sentence story: Zeus travels to Italy with his owl on United Airlines. And here's where the LLM comes in:

There are still several manual steps here. I am thinking of ways to automate the process from a single input: given just the number, look up the sequence of words in the index and then generate the image.
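The lookup half of that automation could be sketched like this. It assumes numbers are split into two-digit chunks from the left, with a single leftover digit looked up on its own; `WORD_INDEX` is a hypothetical mini-index holding only a few words mentioned in this post, and a real index would need an entry for every chunk.

```python
import re

# Hypothetical mini-index with a few words from this post.
# A complete index would cover every two-digit chunk plus single digits.
WORD_INDEX = {"00": "Zeus", "33": "Miami", "99": "puppy", "5": "Owl"}


def number_to_words(number: str, index: dict[str, str] = WORD_INDEX) -> list[str]:
    """Split a number into two-digit chunks and look each chunk up in the index."""
    digits = re.sub(r"\D", "", number)  # drop decimal points, units, spaces
    chunks = [digits[i:i + 2] for i in range(0, len(digits), 2)]
    missing = [c for c in chunks if c not in index]
    if missing:
        raise KeyError(f"no index entry for chunks: {missing}")
    return [index[c] for c in chunks]


print(number_to_words("3399m"))  # ['Miami', 'puppy']
```

The resulting word list is what would then be handed to the story and image generation step, which is the part that still needs an LLM.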

This kind of stuff really excites me: using LLMs to augment ourselves, to expedite our practice, and to let their generative power be the consort to our discipline. What a world we live in.